DeepLearning Hero

RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs

2 years ago - 14:06

Efficient NLP

Rotary Positional Embeddings: Combining Absolute and Relative

2 years ago - 11:17

Jia-Bin Huang

How Rotary Position Embedding Supercharges Modern LLMs [RoPE]

1 year ago - 13:39

Outlier

Rotary Positional Embeddings Explained | Transformer

4 months ago - 20:28

JakZee

Rotary Position Embedding explained deeply (w/ code)

1 year ago - 23:26

Vizuara

Rotary Positional Encodings | Explained Visually

8 months ago - 34:38

Umar Jamil

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

2 years ago - 1:10:55

Vuk Rosić

Rotary Positional Embeddings & Rotation Matrix + Python LLM code

1 year ago - 11:05

ExplainingAI

Rotary Positional Embeddings Explained | How Transformers Encode Relative Position

2 days ago - 23:06

Mr. Gyula Rabai

Large Language Models (LLM) - Part 5/16 - RoPE (Positional Encoding) in AI

1 year ago - 4:17

BrainDrain

How positional encoding works in transformers?

2 years ago - 5:36

Zachary Huang

Give me 30 min, I will make RoPE click forever

1 month ago - 29:08

Gabriel Mongaras

RoFormer: Enhanced Transformer with Rotary Position Embedding Explained

2 years ago - 39:52

Discover AI

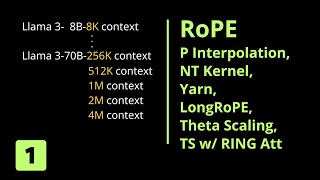

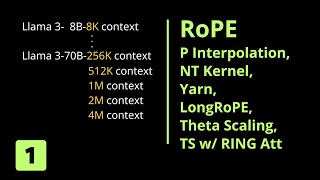

RoPE Rotary Position Embedding to 100K context length

1 year ago - 39:56

Sciencing The Data

What Rotary Positional Embeddings (RoPE) don’t want you to know

3 months ago - 12:03

CodeEmporium

Why Sine & Cosine for Transformer Neural Networks

2 years ago - 0:51

Keyur

DoPE: Denoising Rotary Position Embedding

13 days ago - 10:49

Stanford Online

Stanford XCS224U: NLU I Contextual Word Representations, Part 3: Positional Encoding I Spring 2023

2 years ago - 13:02

Gabriel Mongaras

Round and Round We Go! What makes Rotary Positional Encodings useful?

1 year ago - 32:31

Jia-Bin Huang

How Attention Got So Efficient [GQA/MLA/DSA]

1 month ago - 29:02

Mehdi Hosseini Moghadam

🔥 Master RoPE (Rotary Positional Encoding) - The SECRET Behind GPT & LLaMA's Success! Code and math

6 months ago - 14:37

Learn With Jay

Positional Encoding in Transformers | Deep Learning

1 year ago - 25:54

WTF_Zone

[한글자막] RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs

2 years ago - 14:07

Md Mishfaq Ahmed

The secret sauce behind ROPE (Rotary Positional embedding)

10 months ago - 2:01

Serrano.Academy

How do Transformer Models keep track of the order of words? Positional Encoding

1 year ago - 9:50

Pramod Goyal

Positional Encoding | How LLMs understand structure

11 months ago - 9:10

Tales Of Tensors

I Followed a Token Through the Transformers Architecture (Every Step)

2 weeks ago - 8:17

Arxflix

RoFormer: Transforming Transformers with Rotary Positional Embeddings

1 year ago - 3:34

Jonas Almeida

RoFormer: enhanced transformer with rotary position embedding

1 year ago - 10:23

Umar Jamil

Coding LLaMA 2 from scratch in PyTorch - KV Cache, Grouped Query Attention, Rotary PE, RMSNorm

2 years ago - 3:04:11

AI Papers

VideoRoPE Enhancing Video Rotary Position Embedding for LLMs

10 months ago - 14:28
