HuggingFace

Transformer models: Decoders

4 years ago - 4:27

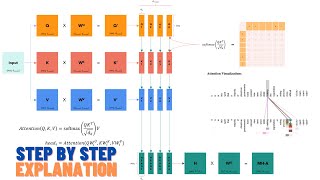

CodeEmporium

Blowing up Transformer Decoder architecture

2 years ago - 25:59

Efficient NLP

Which transformer architecture is best? Encoder-only vs Encoder-decoder vs Decoder-only models

2 years ago - 7:38

HuggingFace

Transformer models: Encoder-Decoders

4 years ago - 6:47

The AI Hacker

Illustrated Guide to Transformers Neural Network: A step by step explanation

5 years ago - 15:01

Learn With Jay

Decoder Architecture in Transformers | Step-by-Step from Scratch

8 months ago - 41:29

Luke Ditria

Decoder-Only Transformer for Next Token Prediction: PyTorch Deep Learning Tutorial

1 year ago - 15:11

CampusX

Transformer Decoder Architecture | Deep Learning | CampusX

1 year ago - 48:26

codebasics

Transformers Explained | Simple Explanation of Transformers

11 months ago - 57:31

CodeEmporium

Transformer Decoder coded from scratch

2 years ago - 39:54

IBM Technology

What are Transformers (Machine Learning Model)?

3 years ago - 5:51

Umar Jamil

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

2 years ago - 58:04

3Blue1Brown

Attention in transformers, step-by-step | Deep Learning Chapter 6

1 year ago - 26:10

CodeEmporium

Blowing up the Transformer Encoder!

2 years ago - 20:58

Wisdom Bird

🔍 Understanding the Transformer Decoder: How AI Generates Text! 🚀

9 months ago - 1:52

CodeEmporium

Decoder training with transformers

2 years ago - 0:59

IVIAI Plus

How to Use Minitron-8B-Base for Efficient Language Modeling

1 year ago - 0:51

Google Cloud Tech

Transformers, explained: Understand the model behind GPT, BERT, and T5

4 years ago - 9:11

Learn AI with Joel Bunyan

Transformer : Decoder | Attention is all you need | Natural Language processing | Joel Bunyan P.

2 years ago - 16:54

CodeIgnite

blowing up transformer decoder architecture

11 months ago - 3:10

UofU Data Science

Transformer (Decoder); Pretraining & Finetuning

1 year ago - 1:19:28

Raviteja Ganta

Inputs and Outputs in transformer decoder

4 years ago - 0:18

Learn AI with Joel Bunyan

Let's code the Transformer Decoder in PyTorch | Transformer Neural Networks | Joel Bunyan P.

2 years ago - 37:07

CodeEmporium

Why masked Self Attention in the Decoder but not the Encoder in Transformer Neural Network?

2 years ago - 0:45